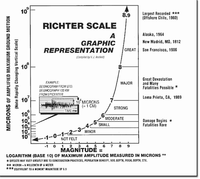

The Richter magnitude scale assigns a magnitude number to quantify the energy released by an earthquake. It is a base-10 logarithmic scale: magnitude is defined as the logarithm of the ratio of the amplitude of the seismic waves to an arbitrary, small reference amplitude.
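The definition above can be written compactly in symbols. The notation is introduced here for illustration and is not taken from Richter's own formulation: $A$ stands for the measured seismic-wave amplitude and $A_0$ for the arbitrary reference amplitude.

$$
M = \log_{10}\!\left(\frac{A}{A_0}\right)
$$

Under this form, a tenfold increase in amplitude raises the magnitude by exactly one unit:

$$
\log_{10}\!\left(\frac{10A}{A_0}\right) = 1 + \log_{10}\!\left(\frac{A}{A_0}\right)
$$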

Development

In 1935, the seismologists Charles Francis Richter and Beno Gutenberg, of the California Institute of Technology, developed what later became known as the Richter magnitude scale, specifically for measuring earthquakes in a particular study area in California, as recorded with the Wood-Anderson torsion seismograph. Richter originally reported magnitudes to the nearest quarter of a unit; later, they were reported to one decimal place. This local magnitude scale made it possible to compare the magnitudes of different earthquakes. Richter modeled his earthquake-magnitude scale on the apparent magnitude scale used to measure the brightness of stars.

Richter defined a magnitude 0 event as an earthquake that would show a maximum combined horizontal displacement of 1.0 µm (0.00004 in.) on a seismogram recorded with a Wood-Anderson torsion seismograph 100 km (62 mi.) from the earthquake epicenter. That reference level was chosen to avoid negative magnitudes, given that the smallest earthquakes that could be recorded and located at the time were around magnitude 3.0. However, the Richter magnitude scale itself has no lower limit, and contemporary seismometers can record and measure earthquakes with negative magnitudes.
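To make the zero point concrete, here is a minimal Python sketch. It assumes the reading is taken at exactly the 100 km reference distance on a Wood-Anderson seismogram, so no distance correction is applied. The function name and constant are hypothetical, chosen for illustration only.

```python
import math

# Reference displacement for a magnitude 0 event at 100 km, as stated in the text.
REFERENCE_AMPLITUDE_UM = 1.0


def magnitude_at_reference_distance(amplitude_um: float) -> float:
    """Return the base-10 log of the amplitude ratio to the 1.0 µm reference.

    Assumption: the amplitude was read at the 100 km reference distance,
    so this sketch omits any correction for other distances.
    """
    if amplitude_um <= 0:
        raise ValueError("amplitude must be positive")
    return math.log10(amplitude_um / REFERENCE_AMPLITUDE_UM)


# Amplitudes below the reference give negative magnitudes;
# amplitudes above it give positive ones.
for amplitude_um in (0.1, 1.0, 1000.0):
    magnitude = magnitude_at_reference_distance(amplitude_um)
    print(f"{amplitude_um:g} µm -> magnitude {magnitude:.1f}")
```

A displacement smaller than the 1.0 µm reference yields a ratio below one, and its logarithm is negative. This is why the scale has no lower limit even though the zero point was chosen to keep the magnitudes of then-detectable earthquakes positive.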